Chinese AI startup DeepSeek has released Prover V2, an updated AI model designed to prove mathematical theorems.

The update was made available via Hugging Face, a well-known AI development platform.

About Prover V2 and Its Development

Prover V2 builds on DeepSeek’s V3 model, which contains 671 billion parameters and uses a mixture-of-experts (MoE) architecture. In an MoE model, a gating network routes each input to a small subset of specialized sub-networks, called experts, so only a fraction of the parameters is active at any one time.
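To make the mixture-of-experts idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration under stated assumptions, not DeepSeek's implementation: the four experts, the gate weights, and the function names (`route_top_k`, `moe_forward`) are all hypothetical, and production models route high-dimensional token vectors through learned neural experts rather than scalar functions.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_weights, k=2):
    """Run only the k selected experts and blend their outputs."""
    # Hypothetical gate: one linear score per expert.
    gate_scores = [w * x for w in gate_weights]
    chosen = route_top_k(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    used = [i for i, _ in chosen]
    return output, used


# Four toy "experts"; a real model would use separate feed-forward networks.
experts = [
    lambda x: x + 1.0,
    lambda x: 2.0 * x,
    lambda x: x * x,
    lambda x: -x,
]
gate_weights = [0.1, 0.5, 0.9, -0.3]

output, used = moe_forward(3.0, experts, gate_weights, k=2)
print("experts used:", used)
print("blended output:", round(output, 4))
```

Only two of the four experts run for this input, which is the property that lets a model with a very large total parameter count keep its per-input compute comparatively small.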

The AI model focuses on formal theorem proving and mathematical reasoning, fields where DeepSeek has gained recognition for advancing AI capabilities.
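For readers unfamiliar with formal theorem proving, the snippet below shows what a machine-checkable statement and proof can look like. It is a hand-written sketch, assuming Lean 4 as the proof assistant (the article does not name one); the theorem name `add_comm_example` is hypothetical and the example is not output from Prover V2.

```lean
-- Statement: addition of natural numbers is commutative.
-- The proof checker accepts the theorem only if every step is valid.
theorem add_comm_example (a b : Nat) : a + b = b + a := by
  exact Nat.add_comm a b
```

A theorem-proving model is given a statement like the first line and has to produce a proof that the checker accepts, so a correct answer can be verified mechanically rather than judged by eye.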

Significance of the Update

Building on V3 gives Prover V2 a large general-purpose base to specialize for complex mathematical problems. The mixture-of-experts design keeps only part of the network active for each input, which helps make a model of this size practical to run.

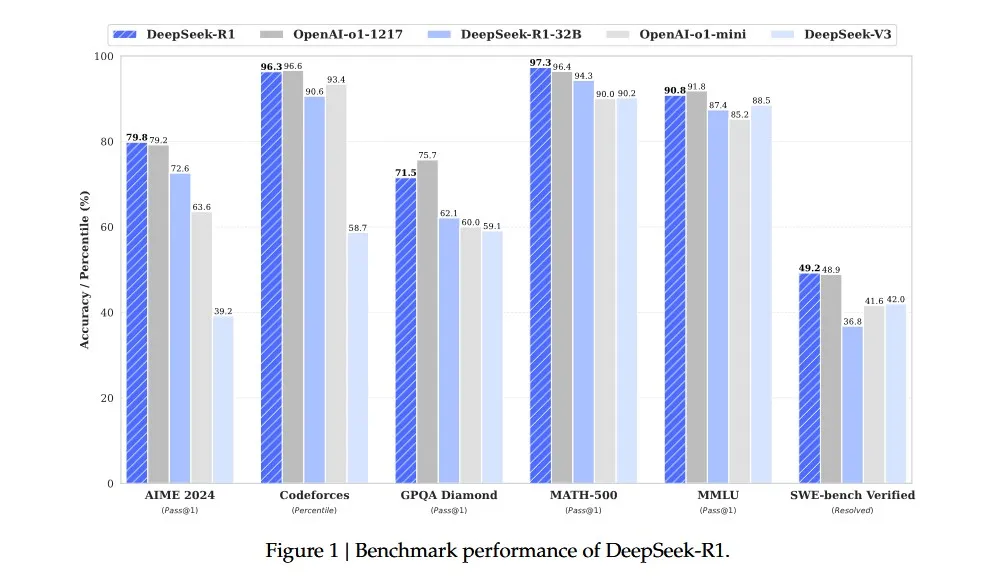

Previously, DeepSeek’s R1 model scored a 79.8% pass rate on the 2024 American Invitational Mathematics Examination (AIME), outperforming similar models from competitors. This performance underscored the company’s role in advancing mathematical AI reasoning.

Key Facts at a Glance

| Aspect | Details |
|---|---|
| Developer | DeepSeek, Chinese AI startup |
| Model Name | Prover V2 |
| Release Date | April 30, 2025 |
| Platform | Hugging Face |
| Architecture | Mixture-of-experts (MoE) with 671B parameters |

The release is in line with DeepSeek’s strategy of refining its models for specialized tasks such as mathematical problem solving.

In February 2025, reports indicated the company considered raising outside investment for the first time. Continued innovations like Prover V2 may enhance DeepSeek’s competitive position in AI development.

These developments highlight the growing importance of mathematical reasoning as a focus of AI research.
